Most organizations segment their training content by job function and call it role-based. Sales sees one playlist, engineering sees another, customer success sees a third. The content varies by audience. The program design does not.

This approach fails quietly. Learners complete modules that feel generic. Managers cannot track competency development by function. L&D teams spend more time maintaining content catalogs than designing learning experiences that match what each role actually requires.

The gap between content segmentation and genuine role-based training is structural. Closing it requires competency mapping, differentiated path design, role-specific assessment, governance, and measurement that operates at the role level. This guide covers each step in the order that implementation demands.

What Role-Based Training Is (and What It Is Not)

Role-based training is a program design methodology that aligns learning paths, assessments, and feedback systems to the specific competencies each job function requires. It goes beyond sorting content by department or filtering a course catalog by team name.

The distinction matters because most implementations stop at content segmentation. An LMS assigns different courses to different user groups. Reporting shows completion by team. The surface-level metrics look role-specific, but the underlying experience is identical: same progression logic, same assessment format, same feedback structure, same evaluation criteria applied across every track.

Genuine role-based programs operate differently. They structure learning sequences by competency dependency, not by topic relevance. They design assessment instruments that reflect what each role needs to demonstrate. They build feedback loops calibrated to the skills each function develops.

When these structural elements are missing, the program defaults to what it actually is: a filtered content library. Learners recognize the difference. Completion rates may hold steady, but the connection between training and on-the-job performance remains weak. Different content is not the same as different design.

Why Most Role-Based Programs Fail Before They Start

The most common failure pattern starts at the design stage. Teams build programs around job titles instead of competencies. Job titles are organizational labels that describe reporting structure and hierarchy. They do not specify the skills, knowledge, and behaviors someone needs to develop.

Two people with the title "Product Manager" at different companies may need fundamentally different training. One focuses on customer discovery and rapid validation. Another focuses on cross-functional alignment and roadmap governance. The title is identical. The competency requirements diverge sharply.

The second failure pattern is over-segmentation. Organizations create a separate track for every job title, producing a matrix of learning paths no team can sustain. Content becomes outdated within months. Assessment criteria drift from current role requirements. Facilitators are spread thin across too many parallel programs.

Effective programs start by identifying where competencies genuinely diverge and where they overlap. Shared foundations reduce duplication. Role-specific branches address only the skills that truly differ by function. Three well-maintained tracks consistently outperform twelve neglected ones.

The third pattern is uniform assessment. When every role track ends with the same quiz format and the same passing criteria, the role-based framing is cosmetic. Learners recognize this quickly. Engagement drops because the evaluation does not reflect the specific skills their track develops.

How to Map Competencies to Roles

Competency mapping is the foundation every subsequent design decision builds on. Without it, learning paths are organized by topic rather than functional need, and the connection between training and job performance breaks at the first structural level.

Start with job analysis, not job descriptions. Job descriptions are HR documents written for recruitment. They list responsibilities and qualifications but rarely specify the trainable skills and behaviors required for proficiency. SHRM's job analysis toolkit provides a structured approach to identifying genuine role requirements through task analysis and competency identification.

A thorough training needs assessment at this stage reveals what each role actually demands through interviews with managers, observation of high performers, and review of performance data. Understanding the difference between competency and capability also matters here: competencies are specific, measurable, and trainable, while capabilities are broader and more adaptive. Role-based training targets competencies because they can be mapped to learning objectives, assessed with rubrics, and tracked over time.

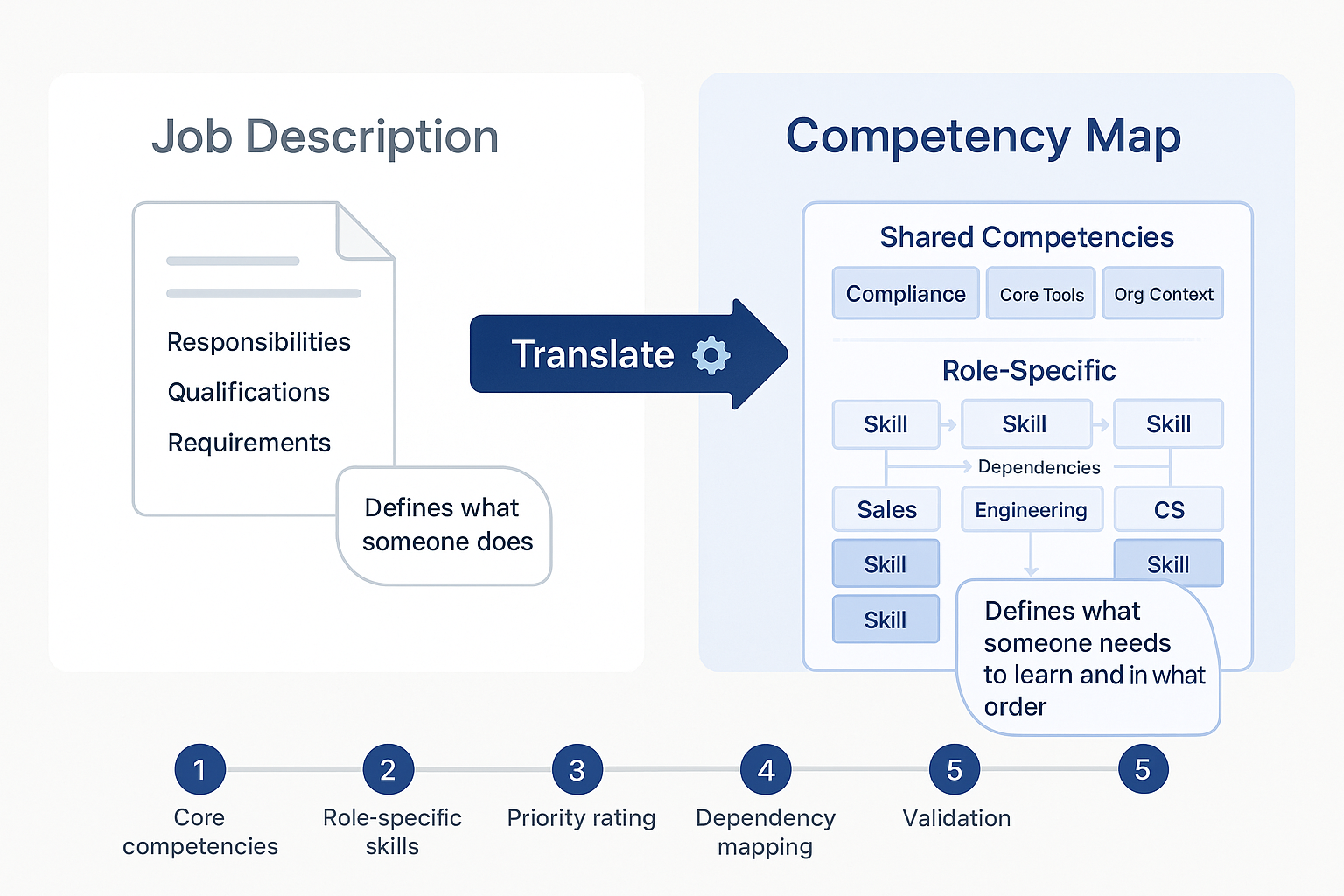

The output is a competency map: a structured document listing both shared foundational competencies (common across multiple roles) and role-specific competencies (unique to each function). Established frameworks like ATD's capability model can guide this process. Building the map follows a clear sequence:

- Identify 3-5 core competencies shared across all target roles

- Identify 3-7 role-specific competencies for each distinct track

- Rate each competency by priority: critical for performance vs. developmental

- Map dependencies between competencies to determine learning sequence

- Validate the map with functional managers and subject matter experts

Dependency mapping, the fourth step in the list above, drives your learning path structure. If consultative selling depends on product knowledge, the path must enforce that order. If project risk assessment depends on stakeholder analysis skills, the sequence reflects that relationship.
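One way to turn a dependency map into a module order is a topological sort. The sketch below is illustrative only: the competency names come from the examples in this section, while the dictionary layout and the `learning_sequence` helper are assumptions, not part of any particular platform.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical dependency map: each competency lists the competencies
# that must be learned before it.
prerequisites = {
    "product_knowledge": set(),
    "consultative_selling": {"product_knowledge"},
    "stakeholder_analysis": set(),
    "project_risk_assessment": {"stakeholder_analysis"},
}


def learning_sequence(prereqs):
    """Return one valid learning order in which prerequisites come first.

    Raises graphlib.CycleError if the map contains a circular dependency,
    which usually means the competency map needs another review.
    """
    return list(TopologicalSorter(prereqs).static_order())


sequence = learning_sequence(prerequisites)
print(sequence)
```

Several orders can satisfy the same map. The sort guarantees only that each prerequisite appears before the competency that depends on it, so the final ordering between unrelated competencies remains a design decision.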

A skills matrix can serve as a practical tool for visualizing where competencies overlap across roles and where they diverge, making the mapping process more systematic.
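As a rough sketch of what such a matrix can look like in practice, the example below stores each role's competencies as a set and derives the shared foundation and the role-specific branches from them. The role names, competency names, and the `split_shared_and_specific` helper are hypothetical.

```python
# Hypothetical competency sets per role (illustrative names only).
role_competencies = {
    "sales": {"product_knowledge", "compliance", "core_tools", "consultative_selling"},
    "customer_success": {"product_knowledge", "compliance", "core_tools", "escalation_protocols"},
    "engineering": {"compliance", "core_tools", "code_review"},
}


def split_shared_and_specific(roles):
    """Return (shared, specific).

    shared:   competencies required by every role (foundation candidates).
    specific: per role, the competencies no other role requires.
    """
    shared = set.intersection(*roles.values())
    specific = {}
    for role, comps in roles.items():
        others = set().union(*(c for r, c in roles.items() if r != role))
        specific[role] = comps - others
    return shared, specific


shared, specific = split_shared_and_specific(role_competencies)

# Print a simple matrix: one row per competency, one column per role.
for comp in sorted(set().union(*role_competencies.values())):
    row = ["x" if comp in role_competencies[r] else "." for r in role_competencies]
    print(f"{comp:<24}", *row)
```

Competencies held by some but not all roles (here, `product_knowledge`) land in neither group. Those are the cases that need a judgment call between extending the foundation and duplicating a module across branches.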

Competency Maps vs. Job Descriptions

A competency map is not a reformatted job description. Job descriptions define what someone does. Competency maps define what someone needs to learn, in what order, and to what standard.

Job descriptions list responsibilities: "Manage client relationships." Competency maps decompose that into trainable components: discovery call methodology, account planning frameworks, escalation protocols, renewal strategy. Each component can be assessed independently, assigned to a specific position in the learning path, and evaluated with role-appropriate criteria.

Organizations that skip this translation and build training directly from job descriptions end up with programs that cover topics but do not develop competencies. The content exists, but the learning architecture lacks the specificity needed to drive measurable skill development.

Designing Differentiated Learning Paths

With competency maps defined, the next step is translating them into structured learning pathways. A learning path is not a content playlist. It is a sequenced program with prerequisites, progression gates, and intentional module ordering based on competency dependencies.

Start with the shared foundation. Most multi-role programs have competencies common across all tracks: organizational context, compliance, core tools, baseline professional skills. Building this shared layer follows the same principles as designing effective training programs, where structure and sequencing drive outcomes more than content volume.

After the shared foundation, paths branch by role. Each branch follows its competency map, with modules ordered by dependency. A technical track might sequence from tool proficiency to process application to architectural decision-making. A customer-facing track might move from product knowledge to communication frameworks to account strategy.

This is where customizing learning paths by role becomes operational. The design principles that make differentiation work:

- Enforce prerequisites. Learners cannot skip modules or self-select their sequence. Progression gates ensure each competency builds on the one before it.

- Keep branches focused. Role-specific content covers only the competencies that genuinely differ across functions. Shared material stays in the foundation.

- Design for cohort delivery. Paths perform best when groups progress together on a defined schedule, creating accountability and context that self-paced consumption cannot replicate.

- Build update points. Include specific positions in each path where content can be refreshed without restructuring the entire sequence.
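The prerequisite principle above can be expressed as a simple progression gate. This is a minimal sketch, assuming a path is stored as a mapping from each module to the modules that must be completed first. The module names follow the customer-facing example earlier in this section, and both helper functions are hypothetical.

```python
# Hypothetical path definition: module -> modules that must be completed first.
path = {
    "org_context": [],
    "product_knowledge": ["org_context"],
    "communication_frameworks": ["product_knowledge"],
    "account_strategy": ["communication_frameworks"],
}


def unlocked_modules(path, completed):
    """Modules a learner may start now: not yet done, all prerequisites done."""
    done = set(completed)
    return [
        module
        for module, prereqs in path.items()
        if module not in done and done.issuperset(prereqs)
    ]


def can_start(path, completed, module):
    """Progression gate: True only if every prerequisite is complete."""
    return module in unlocked_modules(path, completed)


print(unlocked_modules(path, ["org_context"]))
print(can_start(path, ["org_context"], "account_strategy"))
```

Because the gate reads only the path definition, refreshing the content of a module at an update point does not require touching the sequence logic.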

For employee onboarding programs, the shared-foundation-plus-branching model is especially effective. New hires receive organizational context through shared modules, then diverge into role-specific tracks from the first week.

Assessment Design for Role-Based Programs

Assessment is where role-based programs either prove their value or expose their superficiality. If every track uses the same quiz format with identical passing criteria, the differentiation exists only in the content layer. The evaluation layer treats all roles identically, and the program cannot measure whether it develops the specific competencies each function targets.

Role-specific assessment means designing evaluation instruments that match the competency profile of each track. Competency assessment practices provide the foundation: linking evaluation criteria directly to the competencies defined in each role's map.

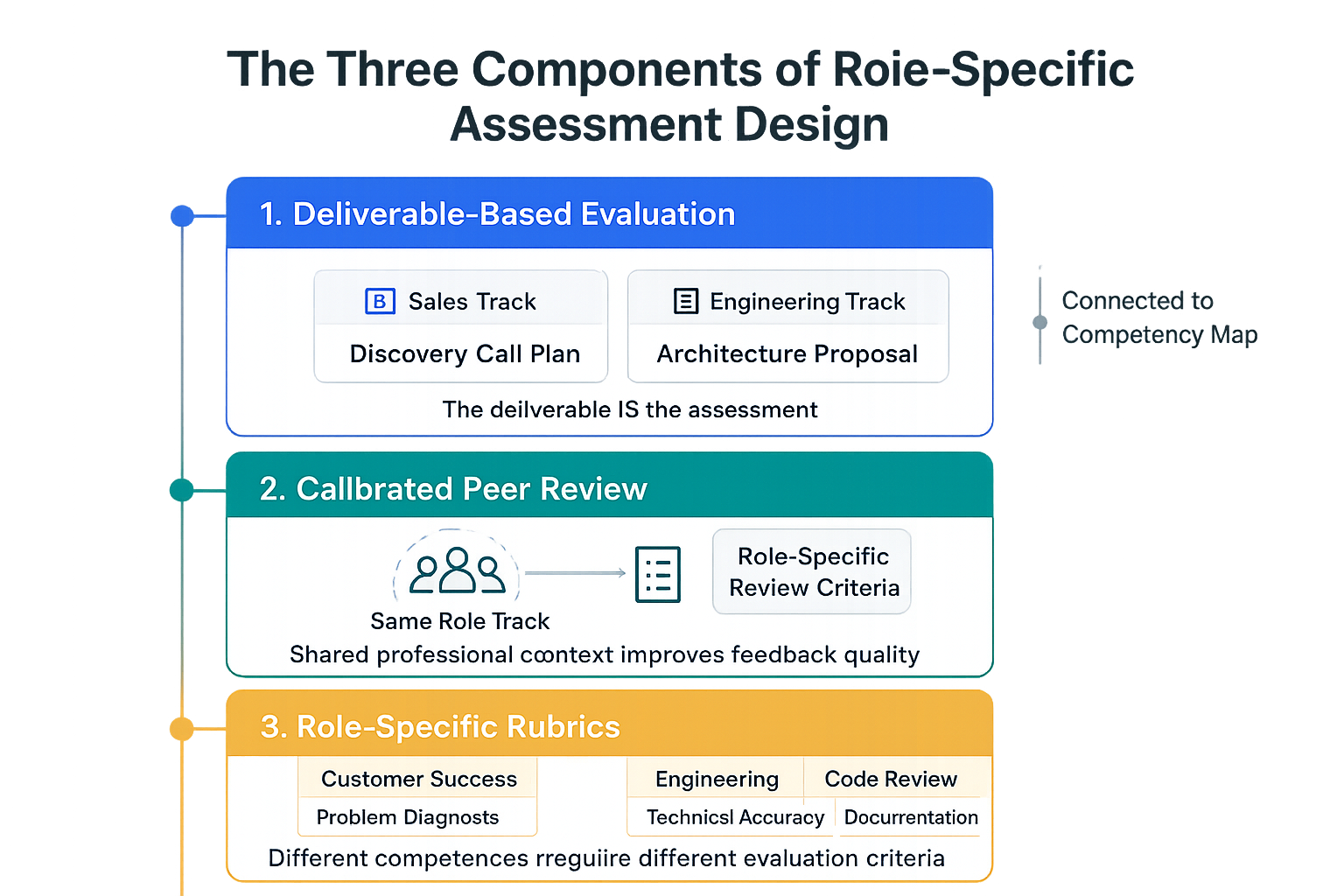

Three components make role-specific assessment operational:

- Deliverable-based evaluation. Learners produce work artifacts relevant to their function. A sales track submits a discovery call plan. A technical track submits documentation or an architecture proposal. The deliverable itself is the assessment, and its quality is measured against role-specific criteria.

- Calibrated peer review. Peer feedback frameworks within role tracks carry more weight because reviewers share professional context. They can evaluate work against role-relevant standards rather than generic quality indicators. Review criteria must match the competencies each track develops.

- Role-specific rubrics. Each track needs its own evaluation rubric with dimensions tied to its competency map. Generic rubrics applied uniformly across roles defeat the purpose of differentiated design.

Building Role-Specific Rubrics

A rubric for a role-based program connects each evaluation dimension to a specific competency from the role's map. A customer success rubric might evaluate problem diagnosis accuracy, solution recommendation specificity, communication clarity for non-technical audiences, and follow-up planning depth.

An engineering rubric for a parallel program might evaluate technical accuracy, documentation completeness, edge case identification, and code review feedback quality.

Both rubrics measure professional competency. Neither would serve the other role effectively. This specificity is what connects assessment to actual job performance and makes training measurably differentiated rather than superficially labeled.
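To make the contrast concrete, the sketch below models the two rubrics as data and scores a submission against the rubric for its own track. The dimension names are taken from the examples above. The weights, the 0-4 rating scale, and the `score` function are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimension:
    competency: str  # competency from the role's map
    weight: float    # relative importance (assumed values)


# Hypothetical rubrics; weights are illustrative, not recommendations.
RUBRICS = {
    "customer_success": [
        Dimension("problem_diagnosis_accuracy", 0.3),
        Dimension("solution_recommendation_specificity", 0.3),
        Dimension("communication_clarity", 0.2),
        Dimension("follow_up_planning_depth", 0.2),
    ],
    "engineering": [
        Dimension("technical_accuracy", 0.4),
        Dimension("documentation_completeness", 0.2),
        Dimension("edge_case_identification", 0.2),
        Dimension("code_review_feedback_quality", 0.2),
    ],
}


def score(track, ratings, scale_max=4):
    """Weighted score in [0, 1] for ratings given on a 0..scale_max scale.

    Raises ValueError if a dimension of the track's rubric is unrated,
    which also catches ratings produced with another track's rubric.
    """
    rubric = RUBRICS[track]
    missing = [d.competency for d in rubric if d.competency not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    total_weight = sum(d.weight for d in rubric)
    weighted = sum(d.weight * ratings[d.competency] for d in rubric)
    return weighted / (total_weight * scale_max)


cs_ratings = {
    "problem_diagnosis_accuracy": 4,
    "solution_recommendation_specificity": 3,
    "communication_clarity": 2,
    "follow_up_planning_depth": 3,
}
print(round(score("customer_success", cs_ratings), 3))
```

Scoring the same ratings against the engineering rubric fails outright, which is the point: a rubric built for one role has nothing to measure in another.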

Scaling Role-Based Programs Across the Organization

Multi-role programs become unsustainable without governance. Governance is not bureaucratic overhead. It is the operational structure that prevents path quality from degrading as the program grows.

Assign ownership per role track. Each track needs a designated owner accountable for content currency, assessment calibration, learner progression, and outcome reporting. Without clear ownership, tracks drift. Content ages out. Assessment criteria disconnect from current requirements. Facilitators default to generic delivery because no one enforces role-specific quality.

A training matrix helps visualize ownership assignments and track status across multiple role paths, making governance visible rather than abstract.

Three structures support sustainable scaling:

- Track-level ownership. One person or team owns each role track end-to-end: content, assessment, facilitation, and reporting.

- Shared module coordination. Foundational modules used across tracks need synchronized updates. A compliance content change affects every role path simultaneously.

- Periodic competency review. Roles evolve. Competency maps require regular validation against current job requirements, performance data, and feedback patterns from active cohorts.

Permission design matters at scale. Track owners should access only their relevant content and learner data. A sales training lead should not navigate engineering tracks to find their cohort. Role-segmented platform access reduces noise and improves response time.

The most common scaling failure is organizational, not technical. Programs that launch well often deteriorate because ownership was never formally assigned. The original designer moves on, tracks run without governance, and quality falls until learners stop engaging.

Measuring Performance Impact by Role Track

Measurement must operate at the role level. Aggregate completion rates, satisfaction scores, and quiz averages mask the information that matters: whether each track develops the competencies it targets.

A program with 80% overall completion might have one track at 95% and another at 55%. A program with strong average assessment scores might have one track with tight rubric calibration and another where evaluators default to generous marks. Only role-segmented reporting surfaces these disparities. Research from CIPD on workplace learning effectiveness consistently emphasizes the importance of function-specific measurement in training evaluation.
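The arithmetic behind that example is easy to reproduce. In the sketch below, the enrollment counts are hypothetical numbers chosen so that the aggregate works out to 80% while the two tracks sit at 95% and 55%. The track names and data layout are assumptions.

```python
# Hypothetical enrollment and completion counts per track.
tracks = {
    "sales": {"enrolled": 500, "completed": 475},
    "engineering": {"enrolled": 300, "completed": 165},
}


def completion_rate(enrolled, completed):
    """Share of enrolled learners who completed; 0.0 for an empty track."""
    return completed / enrolled if enrolled else 0.0


# Aggregate view: pools all learners and hides the gap between tracks.
overall = completion_rate(
    enrolled=sum(t["enrolled"] for t in tracks.values()),
    completed=sum(t["completed"] for t in tracks.values()),
)

# Role-segmented view: one rate per track.
by_track = {name: completion_rate(**t) for name, t in tracks.items()}

print(f"overall: {overall:.0%}")
for name, rate in by_track.items():
    print(f"{name}: {rate:.0%}")
```

The aggregate is weighted by enrollment, so a large, healthy track can mask a smaller one that is failing.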

Measuring training effectiveness at the role level requires tracking these metrics per track:

- Completion rates by track, not aggregated across the program

- Assessment score distributions with variance analysis, not just averages

- Peer review quality measured by rubric adherence and feedback specificity

- Time to competency from enrollment to demonstrated proficiency

- On-the-job application indicators from manager feedback or performance review data
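For the score-distribution metric, a small sketch shows why averages alone are not enough. The scores below are invented, and the spread threshold used to flag a track is an assumption that each program would need to calibrate for itself.

```python
from statistics import mean, pstdev

# Hypothetical rubric scores (0-100) per track.
scores = {
    "sales": [62, 71, 78, 85, 90],
    "engineering": [88, 89, 90, 90, 91],
}


def summarize(values):
    """Mean plus spread, so tightly clustered marks stand out."""
    return {
        "mean": mean(values),
        "stdev": pstdev(values),  # population standard deviation
        "min": min(values),
        "max": max(values),
    }


def flag_low_spread(summaries, min_stdev=3.0):
    """Tracks whose scores barely vary (assumed threshold).

    Low spread is only a prompt to check rubric calibration; it does not
    prove that evaluators are marking generously.
    """
    return [track for track, s in summaries.items() if s["stdev"] < min_stdev]


summaries = {track: summarize(values) for track, values in scores.items()}
flagged = flag_low_spread(summaries)
print(flagged)
```

A track with a high mean and almost no variance may reflect strong learners, or evaluators who default to generous marks. The distribution raises the question, and a review of sample submissions against the rubric answers it.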

The feedback loop matters more than the initial snapshot. When peer reviewers in a specific track consistently flag the same skill gaps, the curriculum needs adjustment. When completion drops at a particular module, the content or its sequence position may need rework.

Platforms built for structured program delivery, like Teachfloor, support this kind of role-segmented reporting alongside learning paths and cohort management. But regardless of tool choice, the principle holds: measurement at the same level of specificity as the training design is what makes continuous improvement possible.

Role-based training is an architectural challenge. Organizations whose programs change actual performance invest in competency-mapped paths, differentiated assessment, role-specific rubrics, deliberate governance, and measurement at the track level. The implementation sequence is non-negotiable: competency mapping before path design, path design before assessment, assessment before scaling, governance before long-term measurement. Each layer depends on the one before it, and shortcuts in early steps compound into structural problems that no amount of content quality can overcome.

Frequently Asked Questions

What is the difference between role-based training and job-specific training?

Role-based training designs complete learning programs, with paths, assessments, and feedback systems, around the competencies each function requires. Job-specific training typically refers to content relevant to a particular role, and it may lack the structural elements that make differentiated programs effective: sequenced prerequisites, role-specific rubrics, calibrated peer review, and track-level governance. The distinction is architectural, not topical.

How many role tracks should an organization create?

Start with the minimum where competencies genuinely diverge. Most organizations benefit from 3-5 well-maintained tracks rather than a separate path for every job title. Where roles share more than 70-80% of competency requirements, shared foundational modules with role-specific assessments are more sustainable than fully separate tracks. Fewer, well-governed tracks consistently outperform many poorly maintained ones.
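The overlap rule of thumb can be checked against a competency map. The sketch below assumes overlap is measured as shared competencies divided by all distinct competencies across the two roles (Jaccard similarity). That definition, the 0.75 threshold, and the competency names are all assumptions, since the guidance above gives only a 70-80% range.

```python
def overlap(a, b):
    """Jaccard similarity: shared competencies / all distinct competencies."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(a, b, threshold=0.75):
    """Suggest a track structure from competency overlap (assumed threshold)."""
    if overlap(a, b) >= threshold:
        return "shared modules with role-specific assessments"
    return "separate tracks"


# Hypothetical competency sets.
account_executive = {"product_knowledge", "discovery_calls", "account_planning", "negotiation"}
account_manager = account_executive | {"renewal_strategy"}
support_engineer = {"product_knowledge", "debugging", "incident_response"}

print(recommend(account_executive, account_manager))
print(recommend(account_executive, support_engineer))
```

The number is a starting point for the conversation with functional managers, not a substitute for it.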

How do you keep role-based programs current as roles evolve?

Conduct periodic competency map reviews with functional managers and high performers. Compare current requirements against the existing training path to surface gaps or outdated content. Assign track-level ownership so one person or team is accountable for currency. Use peer review patterns and assessment score distributions as early signals of where paths need updating before formal reviews confirm it.

What technology capabilities are required?

Effective programs need platforms supporting structured learning paths with prerequisites and progression gates, parallel cohort delivery across multiple tracks, differentiated assessment tools with custom rubrics and configurable peer review, role-segmented reporting, and permission management by track. Basic LMS platforms that only offer content tagging and user-role filtering cannot enforce the structural requirements that make this approach operational.
